As new vehicle models are released each year, automated driving technologies become increasingly available to consumers. Functions that warn drivers if they drift into adjacent lanes and automatic braking are just a few assistance features aimed to help drivers be safer on the road.

While they’re meant to enhance the safety and convenience of driving, automated driving technologies aren’t replacing human drivers — yet.

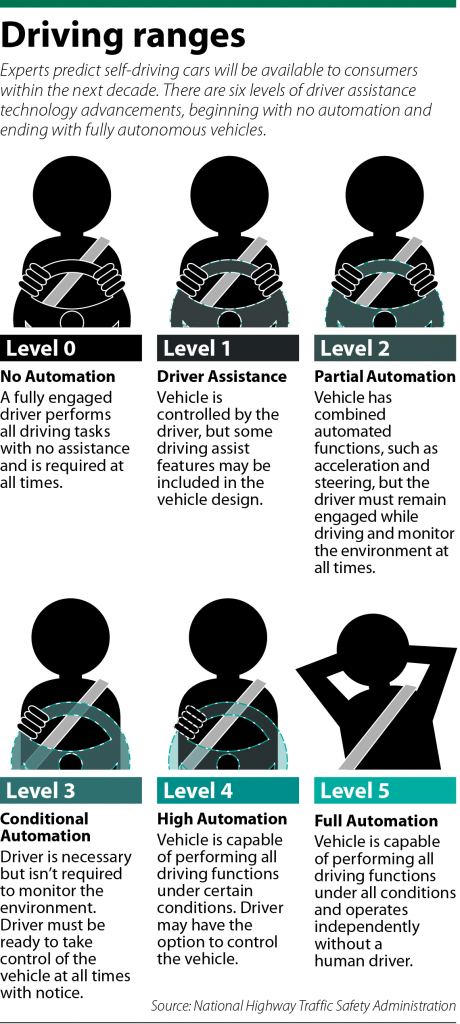

Fully automated “self-driving” vehicles won’t be available to consumers and integrated on U.S. roadways for many years to come, but experts anticipate the addition will take place through six levels of driver-assistance technology advancements.

Advanced driver assistance features, including rearview video systems and lane-centering assistance, began appearing in consumer vehicles more frequently between 2010 and 2016, according to the National Highway Traffic Safety Administration. Vehicles today have additional assist options such as self-parking, lane-keeping assistance and adaptive cruise control.

After 2025, the NHTSA says it expects to see fully automated safety features that enable vehicles to operate independently without a human driver. But questions about these new technologies are still unanswered, leaving policymakers, government leaders and attorneys to fill in the blanks.

Legal considerations

Automation generally will touch every aspect of the law, according to University of South Carolina law professor and automated driving expert Bryant Walker Smith.

Criminal law, product liability, cybersecurity, insurance and other areas of law must be considered with advances in self-driving technology.

“There is a saying in law that says, ‘When nothing is relevant, everything is relevant.’ That’s true of automated driving. Just because there isn’t a law for automated driving doesn’t mean that there isn’t a background law that will interact with it,” Walker Smith said.

As automation increases, one consideration will be who or what is the driver of an automated vehicle in both technical and legal terms. “So if the human driver is going away or they are playing less of a role, who or what is the driver? Is it the company, the vehicle, some other person?” Walker Smith asked.

Another area of confusion could be found with drunken driving cases, said Michigan State University law professor and automated driving expert Nicholas Wittner. When higher-level automated driving vehicles are available in the future, if an individual is intoxicated and boards a Level 4 vehicle to take them home, under current law, who would be the driver?

“Most laws that touch on vehicles will need to change. And there will need to be a lot of policy decisions made if, for example, you did receive a DUI under current law,” Wittner said. “You won’t have to change all laws, but you would have to change a lot of them.”

Precise language

An important aspect to keep in mind with automated driving technologies is how to reference them with accurate terminology, said Wittner. Descriptions of automated systems matter because users could misuse or misunderstand the technology depending on how it is marketed.

“An autonomous vehicle means it can do whatever it wants. For example, you get in it and you want to go to the mountains, and it decides it wants to go the beach. That would make it fully autonomous,” Wittner said.

Another example is Tesla’s use of the term “Autopilot” to describe its semi-automated system, which is a misrepresentation of what the functions can actually do, Wittner said. That can be dangerous for drivers who don’t understand how the vehicles actually function.

Wittner said Tesla is currently facing several product-liability suits for its Level 2 vehicles. He anticipates product liability will be the number one issue to arise in courts once Level 3 and Level 4 vehicles are more commonly available to consumers.

One of those lawsuits is a class action filed in California by the survivors of Kevin McCarthy, an Indianapolis entrepreneur with the legal startup Case Pacer, who was killed in a Tesla crash in November 2016. The suit asserts that the automobile’s defects, among them its “uncommanded acceleration,” led to McCarthy’s death after his Tesla Model S crashed on Illinois Street in downtown Indianapolis.

“Things will go wrong. Nothing is perfect,” Wittner said.

Getting comfortable

As automated vehicles become more prevalent, automated driving expert Jeff Gurney said attorneys will need to familiarize themselves with the evolving technology to be equipped for future cases.

Lawyers should first learn the lingo and understand how the vehicles actually operate from a technological standpoint, Gurney advised. From there, they should consider how an automated feature in one of their cases may change the legal analysis.

“Then lawyers need to think about how it will impact their practice. If your practice is very dependent on run-of-the-mill automobile crashes and then a new technology is introduced, it’s going to significantly reduce the number of vehicle crashes and impact,” he said.

“Lawyers that depend on things like automobile crashes or certain traffic offenses like DUIs need to start thinking critically about what impact these vehicles will have on their practice.”

What’s available?

Currently, the only Level 4 vehicles available for use are owned by Waymo, which stems from Google’s parent company, Alphabet. It offers driverless ride-hailing services confined to one area in southeastern Phoenix, Arizona.

“Instead of having an Uber and person drive over to you, you could signal for a Waymo vehicle from a fleet that would pick you up and take you wherever you want to go,” Wittner said.

But in order to offer driverless rides, Waymo had to undergo the task of mapping every road and all of the potential conditions, Walker Smith said.

“The challenge for a company like Waymo now is duplicating it, figuring out how to scale up a deployment like that to all kinds of other communities and conditions,” he said. “Cities with denser streets, with more pedestrians, with snow, with more potholes, with different traffic rules — all of these things that had to be very slowly and carefully learned by or in some cases programmed into Waymo vehicles. It’s kind of an open question with the history of how scalable that approach will turn out to be.”•